Artificial intelligence is starting to act on its own.

Not in a sci-fi way, but in real life. Agentic AI systems can decide, act, and adapt without waiting for a prompt.

The jump from automation to autonomy is not just technical. It makes us ask an old question in a new way: what does it mean to trust a machine?

What Agentic AI Really Means

Agentic AI goes beyond automation. It decides what to do rather than just following scripts or responding to prompts. These agents can interpret goals, assess data, and take action inside your digital environment.

Examples:

- A cybersecurity agent that patches vulnerabilities the moment it detects them

- A logistics agent that re-routes shipments after weather disruptions

- A data agent that transfers records securely across departments

Why Trust Looks Different Now

Traditional trust in AI was simple: we verified the input and reviewed the output. The machine acted inside clear lines.

However, agentic systems move faster than that. They make thousands of microdecisions a second, across systems humans can’t monitor in real time.

If we tried to slow them down for review, we would lose the value of autonomy. So instead of manual oversight, we need digital trust built into the system itself.

Trust built into the system turns AI from a black box into a responsible participant in your digital system.

Why Digital Trust Matters More Now

Autonomous systems expand both capability and risk.

If a simple script fails, you can roll it back. If an autonomous agent acts unpredictably, the damage can multiply before anyone notices.

The risks include:

- Hidden actions that leave no trace

- Manipulated data sources shaping bad decisions

- Cross-system errors when agents miscommunicate

- Lack of visibility into who or what made a change

Digital trust does not remove these risks. It makes them visible and fixable.

How Agentic AI Actually Works

At its core, agentic AI runs on three functions:

- Perception (Observe): The system first observes data, events, or signals (e.g., detecting a failed transaction).

- Reasoning (Decide): Based on the observations, the system assesses the context and chooses the next action (e.g., prioritizing which issue to resolve first).

- Action (Execute): Finally, the system executes the chosen decision (e.g., restarting a process or sending a report).
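
The three functions above can be sketched as a small loop. This is only an illustration, not how any particular product works: the `Event` type, the severity scale, and the canned responses in `act` are all assumptions made up for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Event:
    """A hypothetical signal the agent can observe."""
    kind: str
    severity: int  # higher means more urgent (assumed scale)


def observe(queue: list[Event]) -> list[Event]:
    """Perception: take a snapshot of the events currently waiting."""
    return list(queue)


def decide(events: list[Event]) -> Optional[Event]:
    """Reasoning: pick the most severe event, or nothing if idle."""
    if not events:
        return None
    return max(events, key=lambda e: e.severity)


def act(event: Event) -> str:
    """Action: carry out a response and report what was done."""
    if event.kind == "failed_transaction":
        return "restarted payment process"
    return f"sent report about {event.kind}"


def run_once(queue: list[Event]) -> Optional[str]:
    """One pass of the observe, decide, act cycle."""
    chosen = decide(observe(queue))
    if chosen is None:
        return None
    queue.remove(chosen)  # the handled event leaves the queue
    return act(chosen)
```

Calling `run_once` repeatedly on a queue handles the most urgent event first, then the next, and returns `None` once nothing is left to do.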

Multiple agents can work together across systems. They communicate, share context, and coordinate results. That’s where trust infrastructure becomes essential: each agent must verify who it’s talking to and what data it’s receiving.
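
Here is one way that verification could look, as a minimal sketch. It assumes each agent shares a secret key with the receiver and signs its messages with HMAC-SHA256 from the Python standard library. The agent names, the keys, and the `AGENT_KEYS` registry are hypothetical, and a real deployment would more likely rely on certificates or hardware-backed keys.

```python
import hashlib
import hmac
import json

# Hypothetical key registry: agent name -> shared secret (demo values only).
AGENT_KEYS = {
    "logistics-agent": b"demo-secret-1",
    "data-agent": b"demo-secret-2",
}


def sign_message(sender: str, payload: dict) -> dict:
    """Attach an HMAC-SHA256 tag computed with the sender's key."""
    body = json.dumps(payload, sort_keys=True).encode()
    tag = hmac.new(AGENT_KEYS[sender], body, hashlib.sha256).hexdigest()
    return {"sender": sender, "payload": payload, "tag": tag}


def verify_message(message: dict) -> bool:
    """Check that the sender is known and the payload was not altered."""
    key = AGENT_KEYS.get(message.get("sender"))
    if key is None:
        return False  # unknown sender: reject
    body = json.dumps(message["payload"], sort_keys=True).encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected, message.get("tag", ""))
```

A receiving agent would call `verify_message` before acting on anything it is sent. A changed payload or an unregistered sender makes the check fail.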

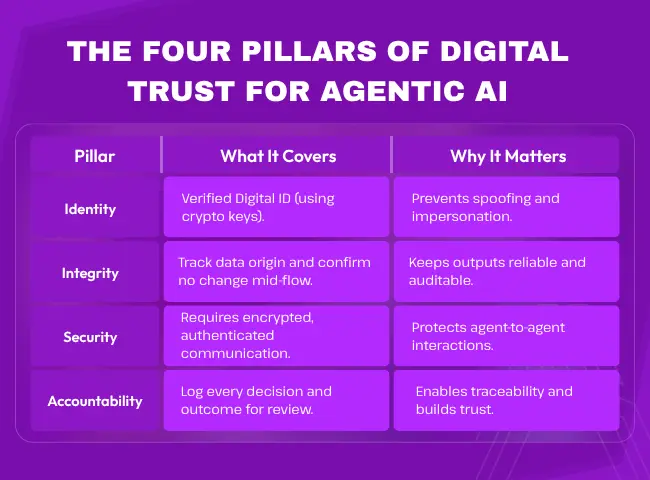

How to Build Trust in Agentic AI

Let’s make it practical.

- Define What Each Agent Can Do

Start small by giving each agent a clear job description that covers access, decision limits, and review process.

No one hires an employee without a contract, and the same rule applies to AI.

- Give Agents Real Identities

Every agent needs a verified digital ID.

Use certificates, cryptographic keys, or hardware-based credentials to block imposters.

- Protect Every Interaction

Use secure protocols for agent-to-agent and agent-to-system communication.

Think of it like locking every door in a smart building, not because you expect a break-in, but because it is careless to leave one open.

- Keep an Audit Trail

Every decision, prompt, and action must be logged and time-stamped.

If something goes wrong, you can retrace steps.

- Make Trust Revocable

Digital trust should never be permanent.

If an agent acts outside its rules or its credentials are compromised, you should be able to disable it instantly.
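
To make a few of these steps concrete, here is a rough sketch that combines a per-agent job description, a time-stamped audit trail, and instant revocation. `AgentPolicy`, `TrustRegistry`, and the action names are invented for illustration. The log lives in memory here, which is an assumption of the sketch; a real system would write to durable, tamper-resistant storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AgentPolicy:
    """Hypothetical 'job description': what one agent may do."""
    agent_id: str
    allowed_actions: set[str]
    revoked: bool = False


@dataclass
class TrustRegistry:
    policies: dict[str, AgentPolicy] = field(default_factory=dict)
    audit_log: list[dict] = field(default_factory=list)

    def register(self, policy: AgentPolicy) -> None:
        self.policies[policy.agent_id] = policy

    def revoke(self, agent_id: str) -> None:
        """Disable an agent immediately; later requests are denied."""
        self.policies[agent_id].revoked = True
        self._log(agent_id, "revoke", allowed=True)

    def authorize(self, agent_id: str, action: str) -> bool:
        """Allow an action only if the agent is known, active, and in scope."""
        policy: Optional[AgentPolicy] = self.policies.get(agent_id)
        allowed = (
            policy is not None
            and not policy.revoked
            and action in policy.allowed_actions
        )
        self._log(agent_id, action, allowed)
        return allowed

    def _log(self, agent_id: str, action: str, allowed: bool) -> None:
        """Append a time-stamped record of every decision."""
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "action": action,
            "allowed": allowed,
        })
```

Every call to `authorize` leaves a record whether it succeeds or not, so denied attempts are as visible as approved ones. After `revoke`, the same agent is refused even for actions that were in scope before.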

The Business Case for Digital Trust

Digital trust isn’t just about compliance. It’s about competitive advantage.

When you can prove your AI systems act responsibly, you build faster, scale safer, and face fewer legal or reputational risks.

Trust creates speed. Partners and customers are more likely to connect to systems that are auditable and secure.

Companies that invest early in verifiable AI behavior set the standard for everyone else.

Digital Trust as a Strategic Asset

Digital trust is about more than compliance. It is about advantage.

Companies that build verifiable AI behavior early will scale faster and face fewer legal or reputational risks.

This proactive approach makes digital trust an extension of an ethical AI strategy, ensuring systems align with human values. When you can prove your systems act responsibly, customers and partners feel confident working with you.

That is not theory. It is good business.

The Human Role Isn’t Gone. It’s Different.

Agentic AI does not replace humans. It changes what humans do.

Instead of approving every move, people design the rules, monitor data, and step in when limits are crossed.

Think of it as moving from micromanaging to system managing.

Humans set the intent, AI executes, and trust systems connect the two.

The Road Ahead

Agentic AI is the next step in automation, but autonomy without trust is chaos.

To make it work, design for accountability from day one.

That means:

- Verified identities

- Trusted data pipelines

- Secure communication

- Transparent logs

Do that, and agentic AI becomes a partner rather than a risk.

AI is learning to act on its own. Our job is to make sure it acts responsibly.

Digital trust gives machines clear boundaries, reliable data, and built-in accountability.

That is how autonomy earns confidence. And that is how we build systems that not only act fast, but act right.